Chapter 9 Inference for Population Proportion(s)

Figure 9.1: A Sample of Statypi

The term for more than one platypus is (probably) platypuses, although this is slightly debated. The term platypus is derived from Greek and platypuses follows the established convention of plural forms of Greek nouns. Ironically, the Greek plural of platypus is platypodes. There is also a healthy minority of English speakers that prefer the informal term platypi.116

We are often interested in knowing what proportion of a population has a certain trait. That trait may be planning to vote yes on a certain proposition, a particular blood type, M&Ms that are blue, girls in different populations, or choosing to exercise at least three times per week. It is often unfeasible, if not impossible, to know parameters for a variety of reasons. Regardless, we want to know as much about the value of parameters as we can and this is the main goal of Inferential Statistics, that is inferring information about a population from the values of statistics from a sample.

For example, if you want to know if a certain proposition will be approved by voters in an upcoming election, we would need to know the population proportion for this trait. However, due to numerous factors, not the least of which is the fact that some people do not make up their mind until the actual moment they cast their vote, it is impossible to know this value in advance. So, we are forced to take a sample of the voters, and use this proportion to give our best guess as to whether the actual proportion of voters will allow for the proposition to pass.

New R Functions Used

All functions listed below have a help file built into RStudio. Most of these functions have options which are not fully covered or not mentioned in this chapter. To access more information about other functionality and uses for these functions, use the ? command. E.g., To see the help file for binom.test, you can run ?binom.test or ?binom.test() in either an R Script or in your Console.

We will see the following functions in Chapter 9.

binom.test(): This function can be used for testing that the proportions (probabilities of success) in one or several groups are the same, or that they equal certain given values.prop.test(): This function can be used for testing the null that the proportions (probabilities of success) in several groups are the same, or that they equal certain given values.

To load all of the datasets used in Chapter 9, run the following line of code.

load(url("https://statypus.org/files/StatypusCh9.RData"))9.1 Part 1: Distribution of Sample Proportions

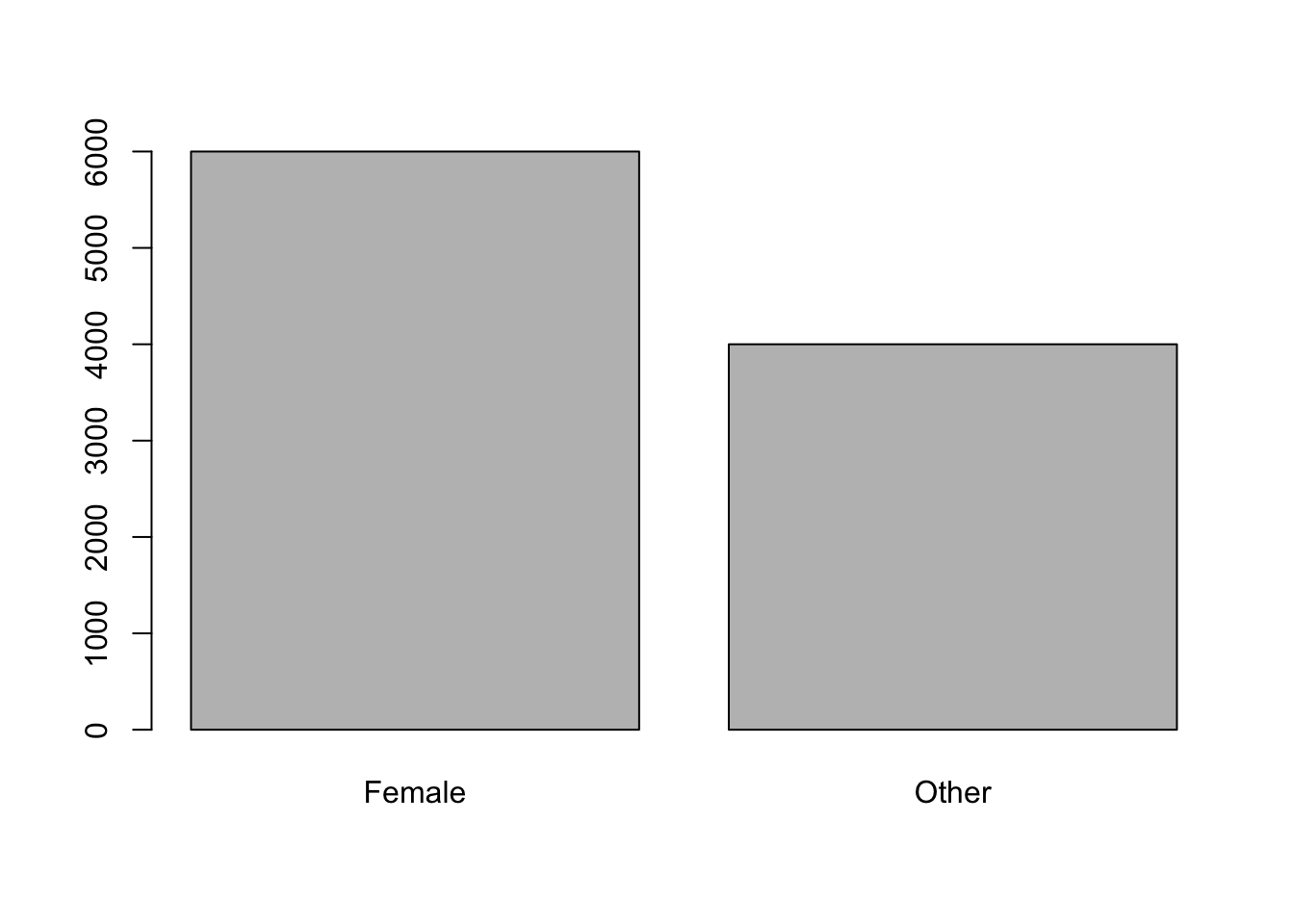

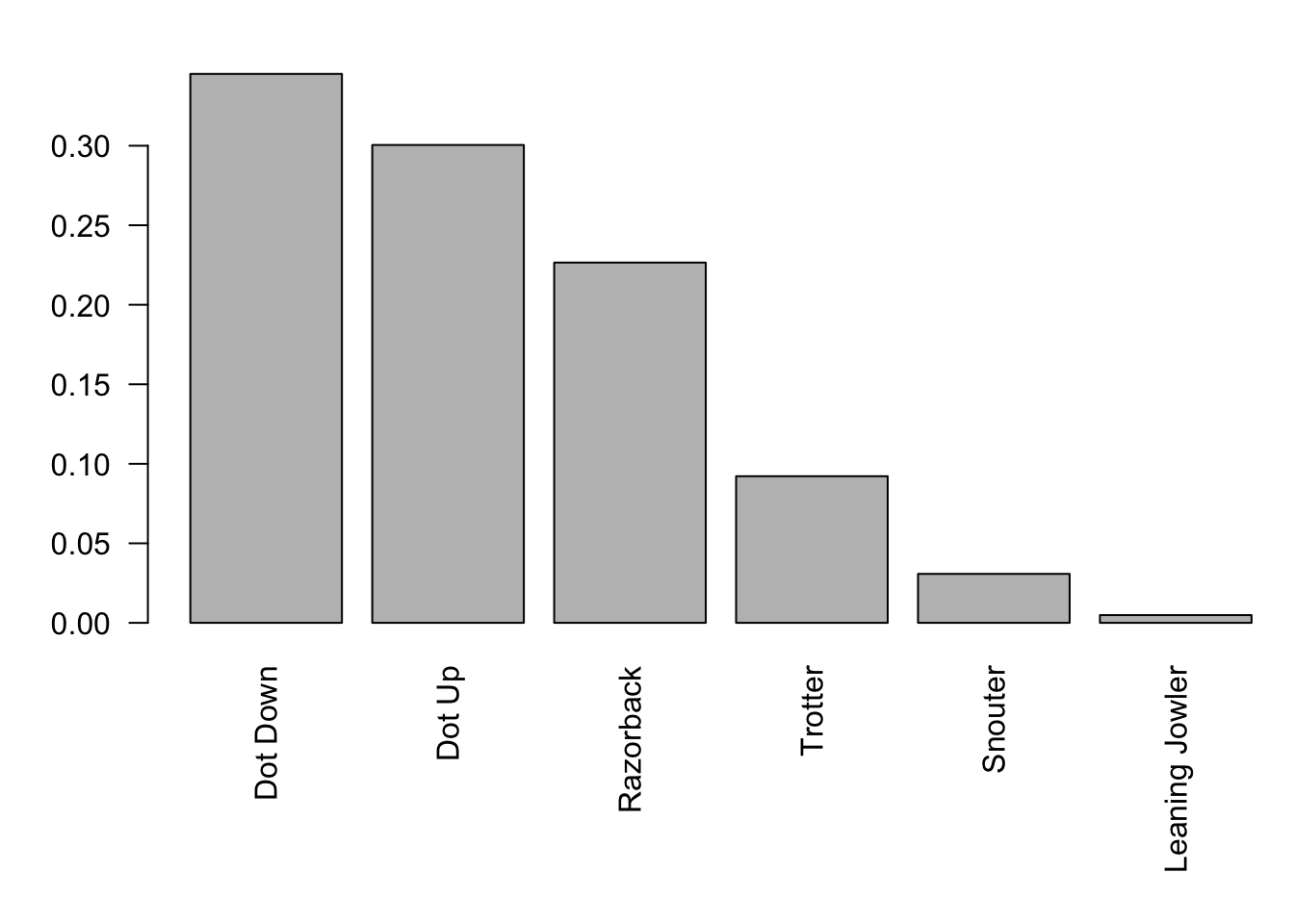

Suppose we are working with a university whose population is 60% female with 6000 females and 4000 people who do not identify as female. The population can be viewed by a bar plot as below.117

Figure 9.2: Bar Plot of University Genders

This is one population of individuals where each individual is an actual person at the university.

We now want to consider a different population that is created by sampling from the above population. The histogram says that we expect 30 out of every 50 students to identify as female. However, it is fairly obvious that if we took repeated different samples of size 50 from the university population that we wouldn’t always get 30 females. (Samples are allowed to share individuals from the university.) So, we now consider what sort of distribution the proportion of females from each sample would give. We expect that we would get that the proportion would be \(0.6 = \frac{30}{50}\), but it would not be shocking to get values such as \(0.58 = \frac{29}{50}\) or \(0.64 = \frac{32}{50}\). However, it would be fairly unlikely to get a sample which has a proportion less than \(\frac{1}{3}\). In fact, we will show that the probability of choosing a sample having a proportion of females less than \(\frac{1}{3}\) is less than six tenths of one percent of one percent, that is approximately 0.00006.118

9.1.1 Formal Setup

Definition 9.1 If we consider a population of size \(N\)119 where a proportion of individuals have a certain trait, this is a parameter known as the population proportion and is denoted as \(p\).

Actually attaining the value of \(p\) is often difficult, if not impossible, as it would likely require a census of the entire population. This was discussed in more detail in Section 2.1. We can get an estimate of this parameter by taking a sample and getting a statistic from it.

Definition 9.2 If we take a sample of size \(n\) and count the number of individuals, which we will call \(x\), which have the desired trait, then we can define the sample proportion to be

\[\hat{p} = \frac{x}{n}.\]

The sample proportion \(\hat{p}\) is the point estimate of the population proportion as it is our best guess for \(p\) given a single sample, but it is only a single point with no margin of error. This is a concept we will further investigate in this chapter.

Some readers may be wondering about why we choose to use \(p\) when Greek letters have been our mainstay for parameters. There is a Greek letter that is often used for the population proportion, but it is \(\pi\). It was decided that the pattern of the use of Greek letters for parameters was not as important as avoiding the almost certain confusion between \(\pi\) being the population proportion (and thus necessarily between 0 and 1) and the constant ratio of a circle’s diameter to its radius (\(\approx 3.14159\)).

In addition, there is a parameter that we did not introduce in Chapter 5. We defined \(r\) to be the correlation coefficient between two sets of data. However, more formally, \(r\) is the sample correlation coefficient, and thus a statistic. \(r\) is a point estimate of the population correlation coefficient which is traditionally denoted by the Greek letter \(\rho\), pronounced the same as “row” like “row your boat.” That is \[r \approx \rho.\] This is explored in Chapter 12 when we do inferential statistics on the least squares regression line.

9.1.2 Notation

Definition 9.3 We summarize the notation we have at this point.

- Parameter: A numerical value that describes some characteristic of a population.

- Census: The process of collecting information from an entire population.

- \(N\): The population size. [Note: May be infinite.]

- \(p\): The population proportion.

- Statistic: A numerical value that describes some characteristic of a sample.

- Survey: The process of collecting information from the sample of a population.

- \(n\): The sample size.

- \(x\): The number of individuals in a sample with the desired trait.

- \(\hat{p}\): The sample proportion, our point estimate of \(p\).

- Point estimate: A single value derived from statistics used to estimate a parameter.

Example 9.1 Suppose we want to find the proportion of M&M candies that are primary colored. That is, what proportion, \(p\), of M&Ms are Red, Yellow, or Blue. We can consider the population to have \(N = \infty\) as hundreds of millions of M&Ms are made every single day and the rate has been growing each year. If you were to be handed a Fun Size bag of Milk Chocolate M&M Candies by your professor and the bag contained 16 total candies of which 7 are primary colored, then we would get that

\[\hat{p} = \frac{7}{16} = 43.75\%\] This value is our point estimate of \(p\) and would be our best guess if we had to pick a single value. It seems clear that the probability that \(p\) is EXACTLY \(\frac{7}{16}\) is likely very small, if not 0, so we will need to include the concept of a Margin Of Error to have any chance of making a true inference (guess) about \(p\). We will do this in Section 9.2 and we will return to this example as we develop more tools.

9.1.3 Set Up and Theory

To understand how close our point estimate, \(\hat{p}\), is to being the parameter, \(p\), we must first understand the population of all possible cases of \(\hat{p}\). That is, we will now consider a population where individuals are values of \(\hat{p}\). That is we consider all possible different samples of the population and take the value of \(\hat{p}\) from each sample to be the individuals of a new population.

It only makes sense to compare samples of the same size, so we will assume that all samples are of an arbitrary size of \(n\).120 If we look at any one individual in the sample, there is a probability of \(p\) that that individual the particular trait we are interested in. To simplify linguistics and draw a connection, we will say that an individual that has the particular trait is a “success” and one that does not have the trait is a “failure.” That is, there is a probability of \(p\) that the first individual is a “success.” If \(n\) is significantly less than \(N\),121 then the selection of each subsequent individual will still result in a probability of \(p\) of finding a “success.”122 That is, we have \(n\) independent individuals who each result in either a “success” or “failure.” For example, if we were to consider the university mentioned at the beginning of this chapter and imagine we are expecting 5 random students to show up to fill out a survey. What is the probability that the first student who arrives is a female? The answer here is clear, it is 60%. Now, the more difficult question is what is the probability that the second student to enter the room is female? This time the answer is a bit more nuanced. In a theoretical sense it is still 60% if we somehow had no knowledge about the first student. However, if we know if the first student was female or not, then the probability of the second student being female changes. This is not always intuitive for people, so we illustrate this with a little “example” with smaller numbers.

Example 9.2 Suppose there is a party and there are 4 cupcakes on a table, 2 of which are chocolate and the other 2 are strawberry. If you were to be given a cupcake at random, it is clear that \(P(\text{Chocolate}) = \frac{1}{2}\). But, what if you weren’t the first person to receive a cupcake? Whichever type of cupcake that is given out first will greatly impact your chances of receiving a chocolate cupcake. We would get the following probabilities.

\[\begin{align*} \text{First Cupcake is Chocolate} &\rightarrow P(\text{Chocolate}) = \frac{1}{3} \approx 33.33\%\\ \text{First Cupcake is Strawberry} &\rightarrow P(\text{Chocolate}) = \frac{2}{3} \approx 66.67\% \end{align*}\]

However, now imagine you are at a factory where there are many cupcakes of each variety. Let’s say, for discussion purposes, that there are 1,000 of each type, chocolate and strawberry.. If you were to get the first cupcake, we get that \(P(\text{Chocolate}) = \frac{1000}{2000} = \frac{1}{2}\). However, if we were to get the second cupcake, we get the following probabilities:

\[\begin{align*} \text{First Cupcake is Chocolate} &\rightarrow P(\text{Chocolate}) = \frac{999}{1999} \approx 49.975\%\\ \text{First Cupcake is Strawberry} &\rightarrow P(\text{Chocolate}) = \frac{1000}{1999} \approx 50.025\% \end{align*}\] Thus, the probabilities have technically changed, the change is very minimal. The probability of getting a chocolate cupcake (at random) has changed in roughly 1 part in 4000 if we get the second cupcake handed out rather than the first.

The difference between the scenarios in the above example is clear. When we sampled 2 cupcakes out of 4, the selection of the first cupcake dramatically impacted the probability of the second cupcake. However, when we sampled 2 cupcakes out of 2000, the probabilities were unchanged up to 3 digits! This leads to the following definition.

Definition 9.4 When \(n\) is significantly small enough compared to \(N\), so that the individuals can be viewed as independent, we will write \(n << N\). This is always true when \(N = \infty\) and we will take \(n << N\) to mean that \(\frac{n}{N} < 0.05\) to avoid the need for any finite population correction.

That is, we will write \(n << N\) whenever the sample size, \(n\), is less than five percent of the population size, \(N\). We call this the $5% Condition.

The choice of 5% in Definition 9.4 is a bit arbitrary. This seems to be fairly standard in the literature, but in essence, some sort of finite population correction is necessary whenever \(N\) is finite. However, we will adopt this convention to allow us to utilize simpler techniques at the beginning of our statistical journey.

If we only sample a small portion of a population, we can assume that each choice is independent. Sampling a large portion of a population is possible, but requires more care and other concepts not explained here on Statypus.

One key tool we will use to understand the distribution of sample proportions is a theorem called the Central Limit Theorem. There are different versions of this theorem, but the version of interest to us is given below.

Theorem 9.1 (Central Limit Theorem (CLT)) Given a distribution or random variable, \(X\), with a population mean of \(\mu_X\) and a population standard deviation of \(\sigma_X\), then the collection of samples of size \(n\) gives rise to a distribution of sample means, \(\bar{x}\), which have mean and standard deviation given below. \[\begin{align*} \mu_{\bar{x}} & = \mu_X\\ \sigma_{\bar{x}} & = \frac{\sigma_X}{\sqrt{n}} \end{align*}\]

If the original distribution was Normal, then the distribution of sample means is also Normal.

However, the distribution approaches Norm\((\mu_{\bar{x}}, \sigma_{\bar{x}})\) as \(n\) gets large regardless of the shape of the original distribution.

A fun classroom demonstration of the Central Limit Theorem involves using M&M candies. The teacher instructions can be found here, the required Google sheet can be found here, and an R script to handle the random numbers involved can be found here.

Otherwise, this Desmos Exploration can be helpful understanding both the CLT and LLN.

Feel free to click on the Desmos logo in the lower right corner to open the graph in Desmos and allow more advanced options. Discuss the implications with your instructor if you have any questions.

Thus, in order to find \(\mu_p\) and \(\sigma_p\), we simply need to realize \(p\) as the average of a distribution we can work with. This isn’t too hard. We let \(X\) be a discrete random variable such that \(P( X = 1 ) = p\) for some \(p>0\) and also require \(P ( X = 0 ) = 1-p\) so that the sample space of \(X\) is \(\{ 0,1 \}\). If we consider \(X=1\) to be a success, we get that an average of a sample of \(n\) values from \(X\) is exactly a value of \(\hat{p}\) we are after. We verify this with a short little example.

Example 9.3 We consider a coin and imagine flipping it 6 times. We can simulate this using the following code.

sample( x = c("Heads", "Tails"), size = 6, replace = TRUE )## [1] "Tails" "Tails" "Tails" "Tails" "Heads" "Heads"If we consider the result of “Heads” to be a success, we get that \(\hat{p} = \frac{2}{6}\) or \(\frac{1}{3}\). If we assign the results of the statistical experiment as below:

\[\begin{align*} \text{Heads} &\rightarrow 1\\ \text{Tails} &\rightarrow 0 \end{align*}\]

Then we get that our sample is equivalent to the vector \({\bf x} = ( 0, 0, 0,0,1,1)\) which immediately gives that \(\bar{x} = \frac{1}{3}\) as well. Thus, \(\bar{x} = \hat{p}\) as claimed.

Thus, we just need to find \(\mu_X\) and \(\sigma_X\) for a generic value of \(p\) and invoke the Central Limit Theorem. Using Definition 6.5, we can do this pretty easily and we summarize the results in the following theorem.

Theorem 9.2 Given a discrete random variable such that \(P( X = 1 ) = p>0\) and \(P ( X = 0 ) = 1-p\), we get that

\[\mu_X = p\] and \[\sigma_X = \sqrt{p \cdot (1-p)}.\]

The proof of Theorem 9.2 can be found in this chapters appendix, Section 9.7.2.1.

With this now known, it is simply a matter to realize that for our particular choice of \(X\) that we have \(\bar{x} = \hat{p}\) as both samples give the proportion of values that were “successes.”

This is exactly a Binomial experiment with \(n\) trials. Using the Normal approximation to the Binomial distribution, as setup in Theorem 8.2, we have the following formulas for the distribution of \(X\) successes out of \(n\) trials.123

\[\begin{align*} \mu_{\scriptscriptstyle X} & = n \cdot p\\ \sigma_{\scriptscriptstyle X} & = \sqrt{n \cdot p \cdot (1-p)} \end{align*}\]

If we now use the relationship that

\[\hat{p} = \frac{X}{n},\] we can quickly derive124 formulas for \(\mu_{\hat{p}}\) and \(\sigma_{\hat{p}}\).

\[\begin{align*} \mu_{\hat{p}} & = \mu_{\scriptscriptstyle \frac{X}{n}} = \frac{\mu_{\scriptscriptstyle X}}{n} = \frac{n \cdot p}{n} = p\\ \sigma_{\hat{p}} & = \sigma_{\frac{X}{n}} =\frac{\sigma_{\scriptscriptstyle X}}{n} = \frac{\sqrt{n \cdot p \cdot (1-p)}}{n} = \sqrt{\frac{p \cdot (1-p)}{n}} \end{align*}\]

The requirement that \(n << N\) will allow us to pull actual samples (without replacement) from a population without affecting the “new” probability of “success” after some individuals are removed. In addition, we will require that we can expect at least 10 successes and 10 failures or that both \(n \cdot p\) and \(n \cdot (1-p)\) are both at least 10 so that \(n\) is large enough to assume the distribution of \(\bar{x} = \hat{p}\) is approximately Normal.125

Theorem 9.3 The distribution of sample proportions, that is the collection of all possible values of \(\hat{p}\) from samples of size \(n\), from a population with a population proportion of \(p\) is approximately Normal with the following parameters.

\[\begin{align*} \mu_{\hat{p}} & = p\\ \sigma_{\hat{p}} & = \sqrt{ \frac{ p \cdot (1-p)}{n} } \end{align*}\]

assuming that \(n\cdot p \geq 10\), \(n \cdot (1-p) \geq 10\), and that \(n << N\).

The inequalities \(n\cdot p \geq 10\) and \(n \cdot (1-p) \geq 10\) are what we will collectively call the Success/Failure Condition.

The following exploration shows the relationship between random samples drawn with the given population proportion and sample size and the theoretical model shown above.

We begin by noting that if we alter the population proportion with the purple slider without changing the sample size that the background changes to a red color and a warning appears indicating that we have violated the conditions of Theorem 9.3. Feel free to toggle the “Normal Model” slider and see if you agree that we shouldn’t trust the model when the conditions are not met. Setting \(p\) to an extreme value near 0 or 1 should show that the model does break down.

Set the sample size to 40 and the population proportion to a value around 0.7. Toggle the “Normal Model” slider to “Yes” and you should see that the histogram of random samples appears to fit the model predicted by Theorem 9.3. If you reduce the number of samples using the black vertical “# of Samples” slider on the right hand side, you should see that the agreement between the histogram and the model becomes weaker. Dragging the “Randomizer” slider will change the random samples that are shown in the histogram. Conversely, increasing the number of samples will increase the agreement between the histogram and the model. This illustrates the concept contained in the Law of Large Numbers, Theorem 6.1.

Further investigation can be done using these tools as well as the tools opened by toggling the “Probability” slider. However, we will not go further here. Reach out to your instructor or the author of Statypus if you have questions on what else you can do with this exploration.

9.1.4 Applications to the Distribution of Sample Proportions

Since there is no new functions being used in this section, we encourage the reader to use the code chunks in the following examples as their template code for problems of this type.

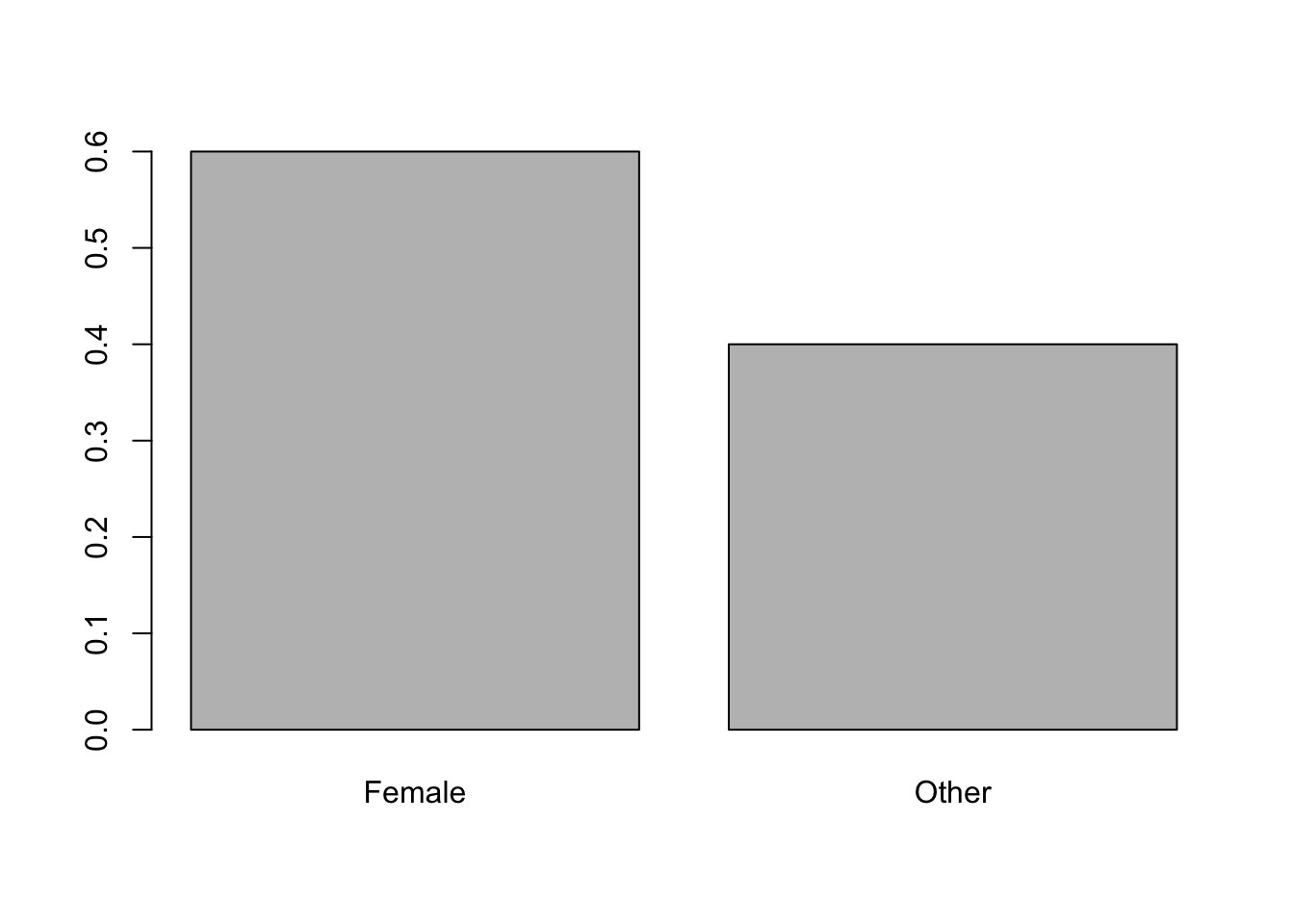

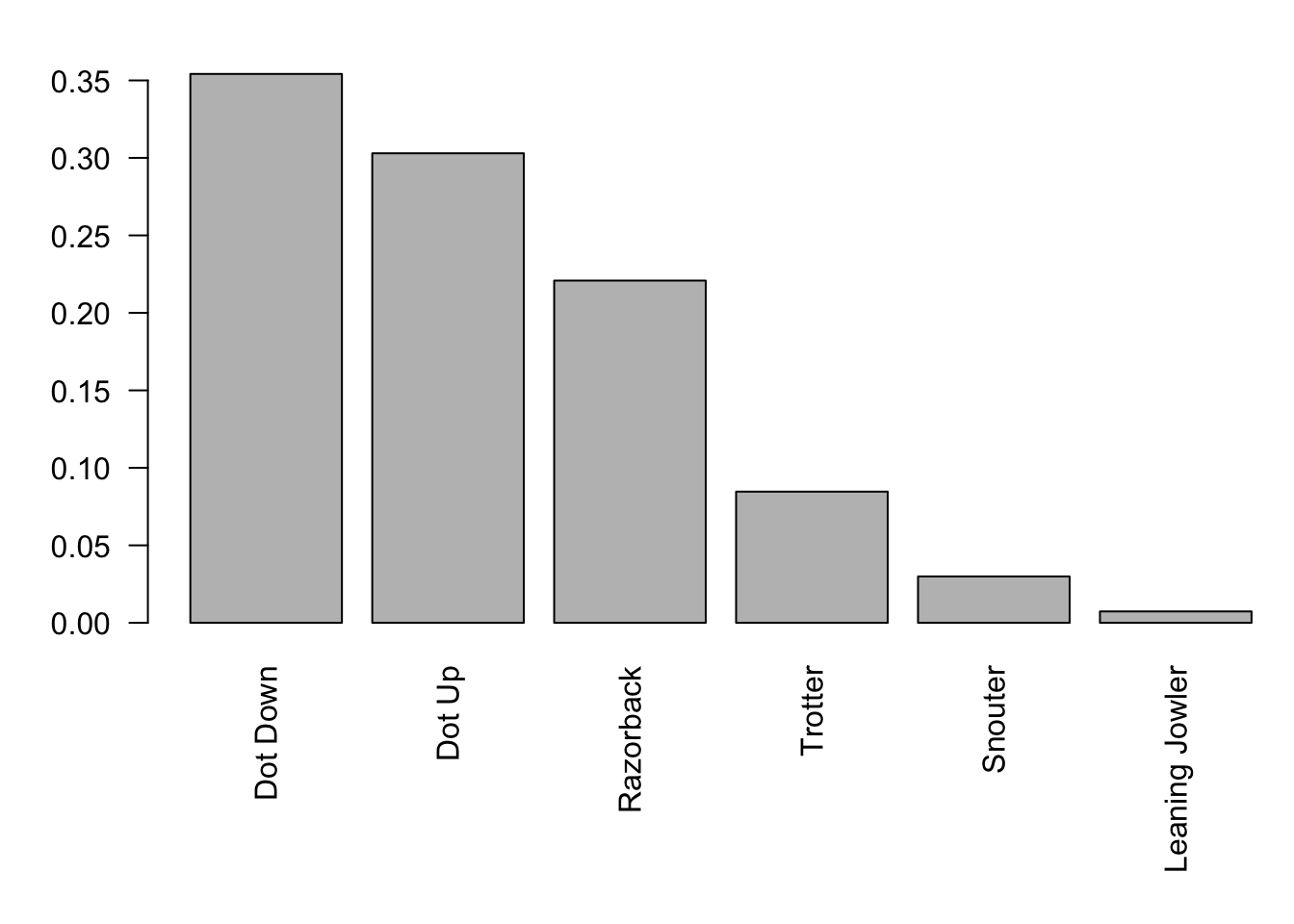

We return to the setup we had in the beginning of this section where we had a population which was 60% female. At this point, we will not assume that \(N = 10000\), but comment on the requisite size of \(N\) as we go along. So, we have that \(p = 0.6\) and our initial population of individual people looks like the following histogram.

Figure 9.3: Relative Frequency Bar Plot of University Genders

Now, if we randomly select 100 people from the population, think of a small to moderate lecture hall, we expect to have 60 females and 40 non-females. Moreover, if the proportion of females was important to a survey we are hoping to give, then we would not want the number of females to be too far from 60.126

Example 9.4 Q: What is the probability that a simple random sample of 100 individuals from the population discussed above would contain no more than a third of females?

We can answer this by realizing that all SRSs of size \(n=100\) would meet our criteria for a Normal distribution as long as the population size is large enough. We know that \(N = 10000\) so that we have that \(n << N\). We can now assume that the distribution of values of \(\hat{p}\) is approximately Normal with

\[\begin{align*} \mu_{\hat{p}} & = p = 0.6\\ \sigma_{\hat{p}} & = \sqrt{ \frac{ p \cdot (1-p)}{n} } = \sqrt{ \frac{ 0.6 \cdot (1- 0.6)}{100}} \approx 0.049 \end{align*}\]

We can now answer our question about an unlikely SRS having no more than a third of females with pnorm using the following code chunk.

#Sample Size

n = 100

#Sample proportion to be tested

pHat = 1/3

#Population proportion

p = 0.6

#Only change the value of `lower.tail` below and also the values above.

pnorm( q = pHat, mean = p, sd = sqrt( p * ( 1 - p ) / n ), lower.tail = TRUE )## [1] 2.614968e-08That is to say that we should expect a sample that under-represents females at least this much once in every 382 million (the reciprocal of the above output of pnorm) SRSs of size \(n = 100\).

Example 9.5 Q: Do you think it would be more or less likely to have a smaller sample have no more than one third females?

Being that we need \(n \cdot p \geq 10\) and \(n \cdot (1-p) \geq 10\), we get that we need \(n \geq 25\).127 We can set the sample size to \(n = 25\) to make sure the sample is not excessively small and run the above code with this new value.

#Sample Size

n = 25

#Sample proportion to be tested

pHat = 1/3

#Population proportion

p = 0.6

#Only change the value of `lower.tail` below and also the values above.

pnorm( q = pHat, mean = p, sd = sqrt( p * ( 1 - p ) / n ), lower.tail = TRUE )## [1] 0.003247793This says we can now expect such “unlucky” samples once in roughly every 308 SRSs of size \(n = 25\). Compared to samples of size \(n=100\), this is over 1 million times more likely!

Example 9.5 shows that \(\hat{p}\) deviating a certain distance from \(p\) is less likely as we increase \(n\). This is exactly what the Law of Large Numbers, Theorem 6.1, told us would happen.

In Chapter 8, we discussed Steph Curry’s all time record of 91.0% free throw percentage. Let’s assume that this is the value of \(p\) of the proportion of free throws that Steph Curry will make.

What is the minimal sample size, \(n\), in order to believe the set of \(\hat{p}\)s of size \(n\) is Normally distributed?

Find \(\mu_{\hat{p}}\) and \(\sigma_{\hat{p}}\) for samples of size \(n=100\) of Steph Curry free throws.

If we use the values found in question 2 above and assume we can treat the distribution of \(\hat{p}\) values are Normally distributed, redo Exercise 8.2 part c. That is, find \(P( \hat{p} > 0.95)\).

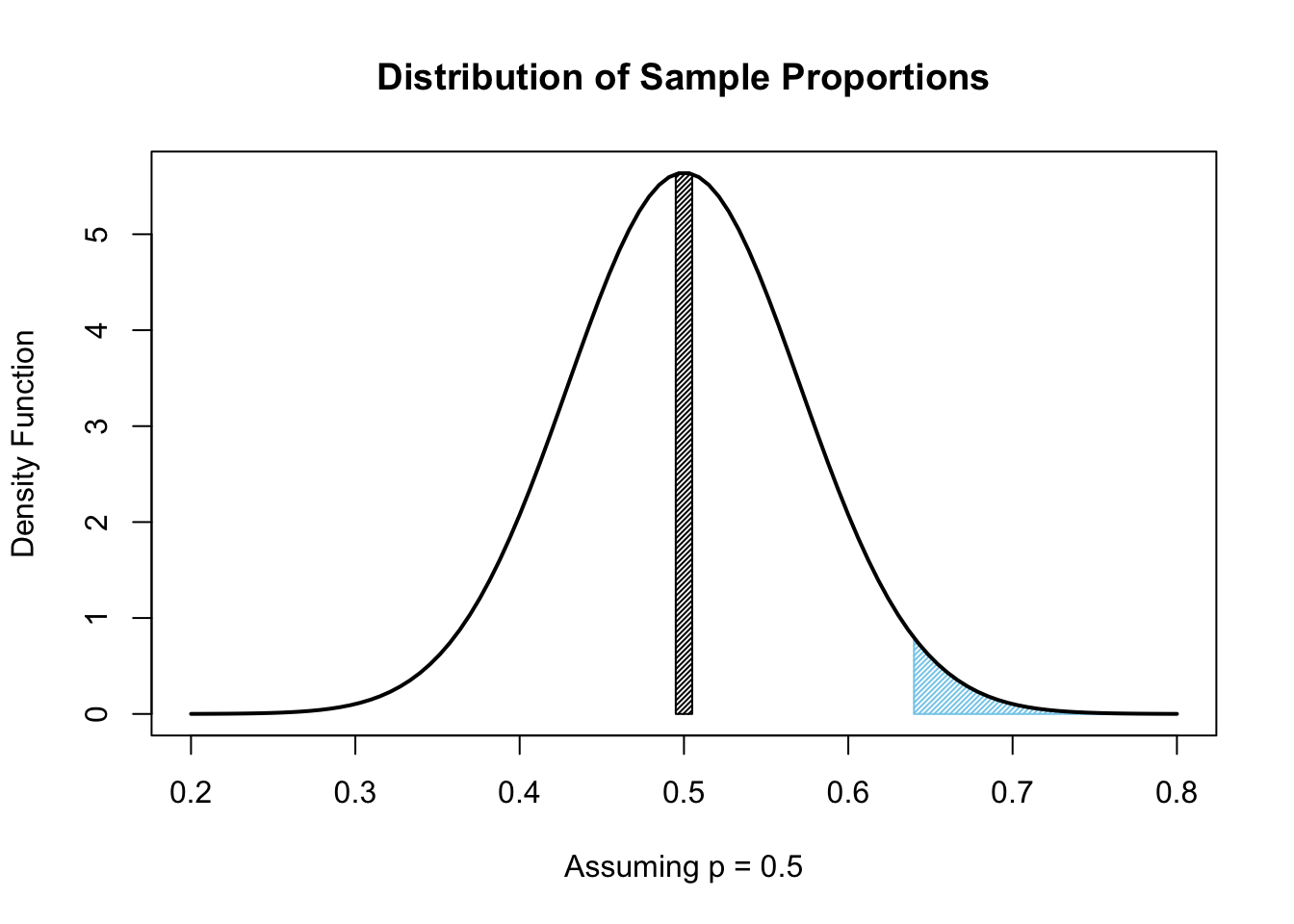

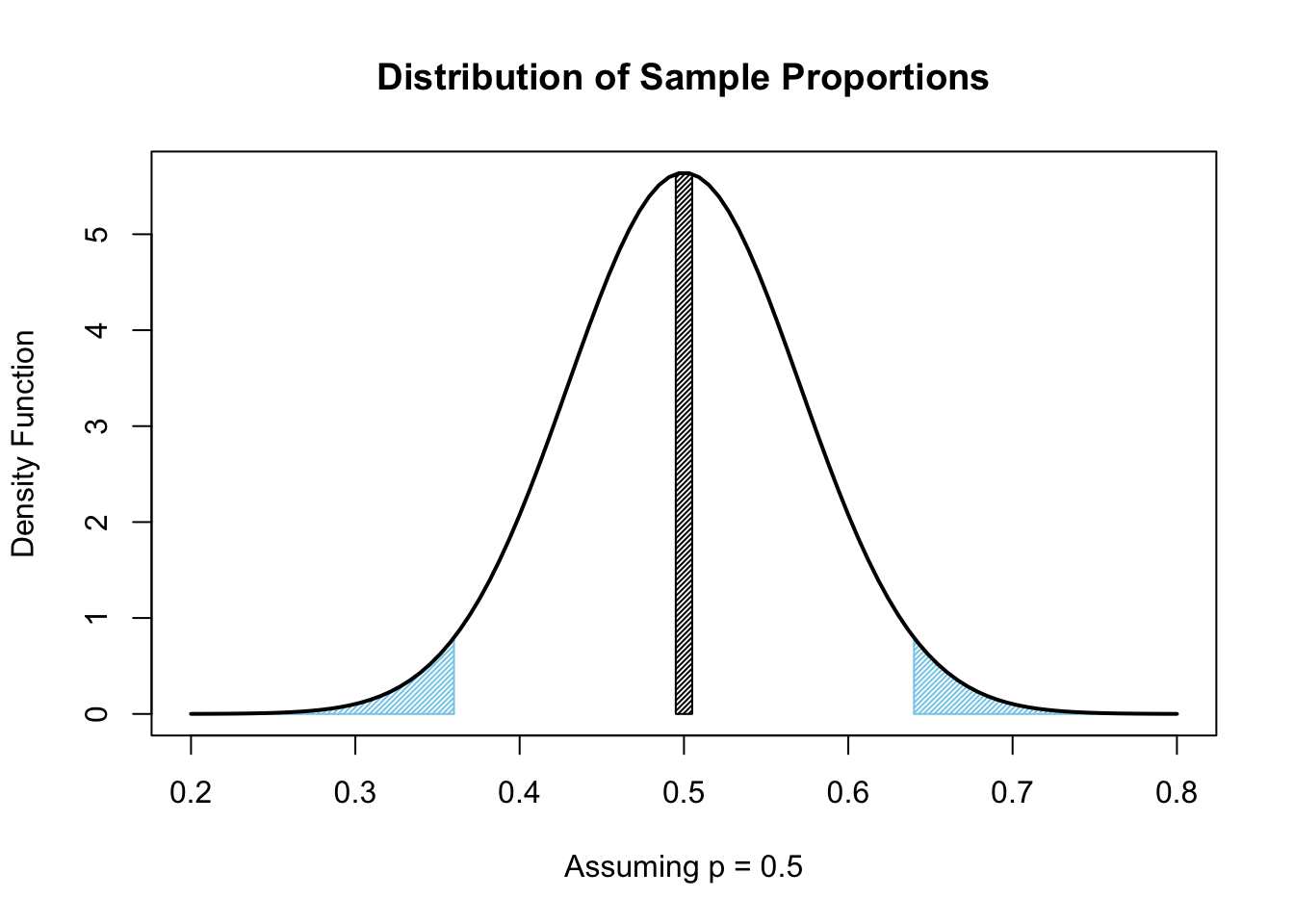

Example 9.6 (Melody Returns) We can use our new tool to re-analyze the saga of Melody’s shoes which was introduced in Section 8.4. We use the same code as above and use \(p = 0.5\) to assume that, at worst, we expect Melody to have a random chance of success equal to that of a Blind Ape in a Dark Room (BADR). We find the probability that a random sample of size \(n = 40\) would give a value of \(\hat{p}\) no higher than the observed value of \(\frac{13}{40}\).

#Sample Size

n = 40

#Sample proportion to be tested

pHat = 13 / 40

#Population proportion

p = 0.5

#Only change the value of `lower.tail` below and also the values above.

pnorm( q = pHat, mean = p, sd = sqrt( p * ( 1 - p ) / n ), lower.tail = TRUE )## [1] 0.01342835This says that we expect a BADR to achieve a sample proportion no better than Melody only 1.34% of the time. This value is less than the one found in Section 8.4, but is very comparable. The main discrepancy is the use of the CLT as compared to using Binomial tools directly. We will see this type of discrepancy later when we use the function binom.test in Section 9.5.

Review for Chapter 9 Part 1

Inference for Population Proportion(s) Quiz: Part 1

This quiz tests your understanding of the Distribution of Sample Proportions (\(\hat{p}\)), which is the foundational concept in Part 1 of Chapter 9. The answers can be found prior to the Exercises section.

- True or False: The population proportion is a statistic derived from a sample, and the sample proportion is a parameter describing the population.

- True or False: The formula for the sample proportion is \(\hat{p} = \frac{x}{n}\), where \(x\) is the number of individuals with the desired trait and \(n\) is the sample size.

- True or False: The sample proportion, \(\hat{p}\), is considered the point estimate of the population proportion, \(p\).

- True or False: For the distribution of sample proportions, \(\hat{p}\), to be viewed as approximately Normal, the number of expected successes, \(n \cdot p\), and expected failures, \(n \cdot (1-p)\), must both be less than 10.

- True or False: The mean of the distribution of sample proportions, denoted \(\mu_{\hat{p}}\), is equal to the population proportion, \(p\).

- True or False: The population proportion is denoted as \(p\) instead of the Greek letter \(\pi\) to avoid confusion with the constant ratio of a circle’s diameter to its radius (\(\approx 3.14159\)).

- True or False: To avoid the need for any finite population correction, the condition \(n << N\) means the sample size, \(n\), must be less than five percent of the population size, \(N\).

- True or False: The distribution of sample proportions (\(\hat{p}\)) can be approximated by a Normal distribution when the sample size is sufficiently large, which is an application of the Central Limit Theorem (CLT).

- True or False: As the sample size, \(n\), increases, the likelihood of the sample proportion, \(\hat{p}\), deviating a certain distance from the population proportion, \(p\), increases.

- True or False: The standard deviation of the distribution of sample proportions is given by the formula \(\sigma_{\hat{p}} = \sqrt{\frac{p \cdot (1-p)}{n}}\).

Definitions

Section 9.1.1

Definition 9.1 \(\;\) If we consider a population of size \(N\)128 where a proportion of individuals have a certain trait, this is a parameter known as the population proportion and is denoted as \(p\).

Definition 9.2 \(\;\) If we take a sample of size \(n\) and count the number of individuals, which we will call \(x\), which have the desired trait, then we can define the sample proportion to be

\[\hat{p} = \frac{x}{n}.\]

Section 9.1.2

Definition 9.3 \(\;\) We summarize the notation we have at this point.

- Parameter: A numerical value that describes some characteristic of a population.

- Census: The process of collecting information from an entire population.

- \(N\): The population size. [Note: May be infinite.]

- \(p\): The population proportion.

- Statistic: A numerical value that describes some characteristic of a sample.

- Survey: The process of collecting information from the sample of a population.

- \(n\): The sample size.

- \(x\): The number of individuals in a sample with the desired trait.

- \(\hat{p}\): The sample proportion, our point estimate of \(p\).

- Point estimate: A single value derived from statistics used to estimate a parameter.

Section 9.1.3

Definition 9.4 \(\;\) When \(n\) is significantly small enough compared to \(N\), so that the individuals can be viewed as independent, we will write \(n << N\). This is always true when \(N = \infty\) and we will take \(n << N\) to mean that \(\frac{n}{N} < 0.05\) to avoid the need for any finite population correction.

That is, we will write \(n << N\) whenever the sample size, \(n\), is less than five percent of the population size, \(N\). We call this the $5% Condition.

Results

Section 9.1.3

Theorem 9.1 (Central Limit Theorem (CLT)) \(\;\) Given a distribution or random variable, \(X\), with a population mean of \(\mu_X\) and a population standard deviation of \(\sigma_X\), then the collection of samples of size \(n\) gives rise to a distribution of sample means, \(\bar{x}\), which have mean and standard deviation given below. \[\begin{align*} \mu_{\bar{x}} & = \mu_X\\ \sigma_{\bar{x}} & = \frac{\sigma_X}{\sqrt{n}} \end{align*}\]

If the original distribution was Normal, then the distribution of sample means is also Normal.

However, the distribution approaches Norm\((\mu_{\bar{x}}, \sigma_{\bar{x}})\) as \(n\) gets large regardless of the shape of the original distribution.

Theorem 9.2 \(\;\) Given a discrete random variable such that \(P( X = 1 ) = p>0\) and \(P ( X = 0 ) = 1-p\), we get that

\[\mu_X = p\] and \[\sigma_X = \sqrt{p \cdot (1-p)}.\]

Theorem 9.3 \(\;\) The distribution of sample proportions, that is the collection of all possible values of \(\hat{p}\) from samples of size \(n\), from a population with a population proportion of \(p\) is approximately Normal with the following parameters.

\[\begin{align*} \mu_{\hat{p}} & = p\\ \sigma_{\hat{p}} & = \sqrt{ \frac{ p \cdot (1-p)}{n} } \end{align*}\]

assuming that \(n\cdot p \geq 10\), \(n \cdot (1-p) \geq 10\), and that \(n << N\).

Big Ideas

Section 9.1.3

If we only sample a small portion of a population, we can assume that each choice is independent. Sampling a large portion of a population is possible, but requires more care and other concepts not explained here on Statypus.

Important Remarks

Section 9.1.3

Some readers may be wondering about why we choose to use \(p\) when Greek letters have been our mainstay for parameters. There is a Greek letter that is often used for the population proportion, but it is \(\pi\). It was decided that the pattern of the use of Greek letters for parameters was not as important as avoiding the almost certain confusion between \(\pi\) being the population proportion (and thus necessarily between 0 and 1) and the constant ratio of a circle’s diameter to its radius (\(\approx 3.14159\)).

In addition, there is a parameter that we did not introduce in Chapter 5. We defined \(r\) to be the correlation coefficient between two sets of data. However, more formally, \(r\) is the sample correlation coefficient, and thus a statistic. \(r\) is a point estimate of the population correlation coefficient which is traditionally denoted by the Greek letter \(\rho\), pronounced the same as “row.” That is \[r \approx \rho.\] This is explored in Chapter 12 when we do inferential statistics on the least squares regression line.

The inequalities \(n\cdot p \geq 10\) and \(n \cdot (1-p) \geq 10\) are what we will collectively call the Success/Failure Condition.

Section 9.1.4

The choice of 5% in Definition 9.4 is a bit arbitrary. This seems to be fairly standard in the literature, but in essence, some sort of finite population correction is necessary whenever \(N\) is finite. However, we will adopt this convention to allow us to utilize simpler techniques at the beginning of our statistical journey.

Quiz Answers

- False (The population proportion (\(p\)) is a parameter describing the population, and the sample proportion (\(\hat{p}\)) is a statistic derived from the sample.)

- True

- True

- False (The Success/Failure Condition requires that both \(n \cdot p\) and \(n \cdot (1-p)\) be greater than or equal to 10 for the distribution to be approximately Normal.)

- True

- True

- True

- True

- False (As \(n\) increases, the standard deviation (\(\sigma_{\hat{p}}\)) decreases, meaning the likelihood of the sample proportion deviating significantly from \(p\) decreases.)

- True

Exercises

Exercise 9.1 In Example 8.5 of Section 8.3, we talked about the probability that a random screw is accurate enough to be usable for a toy plane is 99%.

Treating this as \(p\), what is the minimum sample size to assume the distribution of \(\hat{p}\)s are Normally distributed according to Theorem 9.3?

If we have samples of size \(n=1000\), find \(\mu_{\hat{p}}\) and \(\sigma_{\hat{p}}\).

Using the values found in part b. above, find the probability that a random sample of size \(n=1000\) gives a \(\hat{p}\) that is less than 98%.

Exercise 9.2 A survey company is interested in the proportion of U.S. adults who own a smart watch. They estimate this proportion to be \(p = 0.35\). If they take a random sample of \(n = 50\) adults:

Calculate the mean and standard deviation of the sampling distribution of \(\hat{p}\).

Check the Success/Failure Condition for using the Normal model to describe the sampling distribution.

Based on your check, would it be appropriate to use the Normal model for this sample size? If not, what sample size would you recommend to meet the condition?

Exercise 9.3 The FDA claims that only \(p = 0.04\) of all packaged foods contain an undeclared allergen. A food safety group plans to test \(n = 300\) randomly selected packages.

Find the mean and standard deviation of the sampling distribution of the sample proportion, \(\hat{p}\).

Check the Success/Failure Condition.

Would the Normal model be appropriate for modeling the sampling distribution of \(\hat{p}\)? Explain your reasoning and state which condition, if any, is violated.

Exercise 9.4 Assume that \(40\%\) of all undergraduate students at a university use a study app on their phone. A large introductory statistics class takes a random sample of \(n=150\) students.

Verify that the conditions for using a Normal model for the sampling distribution of \(\hat{p}\) are met.

Calculate the two-standard deviation interval for the sample proportion (\(\hat{p}\)).

Give a practical interpretation of the interval you calculated in part b.

Exercise 9.5 Suppose a poll of \(n=1000\) voters indicates that \(48\%\) favor Candidate A, but the true population proportion is \(p=0.50\).

Calculate the standard deviation of the sampling distribution, \(\text{SD}(\hat{p})\).

Use the appropriate R function to find the probability that a sample proportion \(\hat{p}\) from a sample of this size is \(0.48\) or less, assuming the true proportion is \(p=0.50\).

Explain how this probability relates to the Central Limit Theorem.

Exercise 9.6 A medical researcher is designing a study and needs the standard deviation of the sample proportion, \(\text{SD}(\hat{p})\), to be no more than \(0.02\). They are planning to study a condition that they estimate affects about \(25\%\) of the population (\(p \approx 0.25\)).

Determine the minimum required sample size (\(n\)) for the standard deviation of the sample proportion to be \(\text{SD}(\hat{p}) = 0.02\).

If the researcher wanted the standard deviation to be \(0.01\) (half the size), how would the required sample size change?

Exercise 9.7 Let’s revisit Exercise 8.3.

Which parts lead to sampling distributions that can be treated as being approximately Normal according to Theorem 9.3?

For all parts of Exercise 8.3 where the sampling distribution can be assumed to be approximately Normal, find \(\mu_{\hat{p}}\) and \(\sigma_{\hat{p}}\).

For all parts of Exercise 8.3 where the sampling distribution can be assumed to be approximately Normal, redo those parts using

pnorm.

9.2 Part 2: Confidence Intervals for Population Proportion

We know that the sample proportion, \(\hat{p}\), is our point estimate of the population proportion, \(p\). However, being that for any given value of the sample size, \(n\), there are only \(n+1\) possible values of \(\hat{p}\), and that \(p\) can be any of the infinite values between 0 and 1, we are quite certain that the probability that a given sample gives the exact value of \(p\) is very small (typically 0).

We want to make as confident of a prediction as we can about \(p\) based on a particular value of \(\hat{p}\).

9.2.1 Confidence vs. Margin of Error vs. Precision

There is a sort of tug of war between the desire for a estimation to be precise and our confidence in that estimate. In a perfect world, it would be ideal to both be extremely confident about an estimate and have a very precise estimate, however, this is not possible for any given sample.

](images/Dartboard.jpg)

Figure 9.4: Bullseye of a Dartboard, Photo by Martin Vorel

To illuminate the idea between precision and confidence, let’s consider a simple carnival game of trying to hit a bullseye with a dart from a certain distance. There seems to be a give and take between the precision of the shot needed and the confidence of the thrower. If we think of the radius of the bullseye as the margin or error granted the thrower, then it is clear that as we increase the margin of error, i.e. a larger target, that the confidence of the thrower should increase.

To make this more explicit, imagine you are standing at a standard dart board from any length. The inner or double bullseye has a radius, or margin of error, of only 0.25 inches. Try to mentally guess your probability of hitting this small target if you were given three attempts. Would you be willing to wager $1 that you could do it?

Now imagine that the game is simply whether you can hit the scoring region of the dartboard as a whole from the same distance as with the double bullseye. This has a radius, or margin of error, of just under 9 inches. Would you be willing to make the $1 wager now?

Regardless of your confidence at hitting the double bullseye, it should be true that you had more confidence at hitting the scoring region of the board. You still may not want to take the bet, but you should have had more confidence in hitting the larger target.

This example showed that as we increased the margin of error, we also increased the confidence in hitting that target. The reverse is also true, the only way to increase the confidence of a given thrower is to increase the margin of error. We write this symbolically below.

\[(\text{Confidence} \uparrow) \Leftrightarrow (\text{Margin of Error} \uparrow)\] Remembering that the target we are interested in is \(p\), we can see that the margin of error is actually a measure of how precise the estimate is, but it is clear that a large margin of error would mean a low level of precision. That is, we have the following.

\[ (\text{Margin of Error} \uparrow)\Leftrightarrow (\text{Precision} \downarrow)\] Putting this together, we get the following.

For any given sample, we get that \[(\text{Confidence} \uparrow) \Leftrightarrow (\text{Margin of Error} \uparrow) \Leftrightarrow (\text{Precision} \downarrow)\]

or logically equivalently that

\[(\text{Confidence} \downarrow) \Leftrightarrow (\text{Margin of Error} \downarrow) \Leftrightarrow (\text{Precision} \uparrow).\]

The last part says that if we want higher precision, we must have a smaller margin of error which would lower our confidence.

This exploration offers an interactive alternative to the darts thought example described above.

Begin by adjusting the green slider to a random spot to produce a random location within the white region. This becomes our target.

Memorize where the green dot is in the white field and toggle the black slider to “Guess” to hide the target and show the draggable red guess point.

Drag the red dot to the location you believe the green dot was prior to toggling the black slider.

How confident are you that the green dot is exactly aligned with the red dot? In other words, how often do you think you would win if you wagered $1 that the green dot is exactly aligned with the red dot?

- Raise the vertical red slider slightly and notice that a radius is added to the red dot offering a margin of error for our guess.

How confident are you that the green dot is within the shaded red region? It should be higher than it was before because we now have a margin of error.

- Adjust the vertical red slider and consider how your confidence is impacted by the size of the margin of error.

You should feel more confident with a higher margin of error and less confident with a smaller margin of error. This gives another way of seeing the relationship we stated in the Big Idea above. The only neglected aspect within this exploration is the realization that precision is inversely related to the margin of error. A more precise guess means a smaller margin of error and a less precision means we are allowed a larger margin of error.

9.2.2 Confidence Interval Code

From here on, we will denote the value of \(\hat{p}\) from a sample we are actually working with as \(\hat{p}_0\) (or pHat0 in R code) to distinguish it from the distribution of other possible values of \(\hat{p}\).

Our goal is to give a confidence interval for the population proportion \(p\). Our point estimate, \(\hat{p}_0\), is an unbiased estimator129, so we want an interval with bounds given by

\[\text{point estimate} \pm \text{Margin Of Error}\] or using the fact that we know \(\hat{p}_0\) is our point estimate, we get

\[\hat{p}_0 \pm \text{Margin Of Error}\]

This just leaves us to find a formula for the Margin Of Error, which we will abbreviate as \(\text{MOE}\).

Let’s pretend that we are working with an initial population that has a proportion of \(p = 0.6.\) We will call this p0 in the code to align with the notation used in Section 9.3 dealing with hypothesis testing.

The code chunk before defines the characteristics of the sample we wish to examine. We treat p0 as a “secret” where we tell R the value, but we will not use it in the derivation of the confidence interval.

#Known population proportion (a "secret")

p0 = 0.6

#Sample size

n = 100

#Confidence Level

C.Level = 0.95 We can now use rbinom130 to generate a random number of successes pulled from a population where \({p = 0.6.}\) This is the only time we actually use the value of p0 = 0.6 and hereafter we will treat it as an unknown until we come to the point where we check if \(p\) is indeed within our confidence interval.

x <- rbinom(1,n,p0) #random binomial sample with given arguments

x## [1] 54Thus, for the discussion of this example, we will be working with a sample that had 54 successes out of 100. From this, we can begin working on developing confidence intervals. We first calculate \(\hat{p}_0\) (our observed value of \(\hat{p}\)), denoted pHat0 here.

pHat0 <- x / n #Sample proportion

pHat0## [1] 0.54We would like to be able to calculate the standard deviation of the sample proportions, \(\sigma_{\hat{p}}\), but this is likely impossible. Instead, we introduce the concept of the Standard Error attributed to a sample.

Definition 9.5 Given a sample with a sample proportion of \(\hat{p}_0\) from a population being measured for a certain trait, we say that the Standard Error of The Distribution of Sample Proportions or simply the Standard Error attributed to the sample proportion \(\hat{p}_0\) is the value

\[\text{SE} = \sqrt{\frac{\hat{p}_0\cdot( 1-\hat{p}_0)}{n}}\] which is an approximation to the (likely) unknown value of

\[\sigma_{\hat{p}} = \sqrt{\frac{ p \cdot( 1-p)}{n}}.\] We will treat the standard error like a standard deviation because it is a point estimate of one.

A lot of sources define what we have labeled \(\sigma_{\hat{p}}\) to be the standard error and then treat the value of \(\text{SE}\) as just an approximation to that. While there is nothing wrong with this viewpoint, here at Statypus we will call the value \(\sigma_{\hat{p}}\) the Standard Deviation of The Distribution of Sample Proportions and view it as a parameter where as we will call the value \(\text{SE}\) the Standard Error of The Distribution of Sample Proportions and view it as a parameter.

That is to say we will use the approximation

\[\text{SE} \approx \sigma_{\hat{p}}\] and maintain the convention of using Latin letters (\(\text{SE}\) in this case) to be the estimates of Greek letters (\(\sigma_{\hat{p}}\) in this case). At this point, we have the following pairs of statistics and parameters:

\[\begin{align*} \textbf{S}\text{ample} &\approx \textbf{P}\text{opulation}\\ \textbf{S}\text{tatistic} &\approx \textbf{P}\text{arameter}\\ \textbf{S}\text{ort of } &\approx \textbf{P}\text{erfect}\\ \bar{x} &\approx \mu\\ s &\approx \sigma\\ \text{SE} &\approx \sigma_{\hat{p}} \end{align*}\]

We can now calculate the standard error based on our samples value of \(\hat{p}_0\).

SE <- sqrt(pHat0*(1-pHat0)/n) #Standard Error

SE## [1] 0.04983974We know that 95% of values of \(\hat{p}\) lie within roughly 2 standard deviations (or standard errors in this case) from \(p\). That is equivalent to saying that 95% of samples will give a \(\hat{p}\) that is within approximately 2 standard errors of \(p\). That is, if we set

\[\text{MOE} \approx 2 \cdot \text{Standard Error}\] we are on the right track. This leads us to define a Confidence Level in general.

Definition 9.6 When discussing a confidence interval, the Confidence Level is a value between 0 and 1 that states how often the procedure used will give an interval that captures the parameter. I.e. how often the parameter falls between the values which define the confidence interval.

If we are discussing a 95% Confidence Interval, then we expect the procedure used will “work” for 95 samples out of 100, on average. That is to say we are making a claim of confidence about how often a sample will give a confidence interval that works and not about the individual confidence interval given from a specific sample.

We know the value of 2 above was just an approximation based on the Empirical rule. A better value can be found from qnorm.

Definition 9.7 Given a confidence level of \(C\), we define the Critical \(z\)-Value, which we denote \(z^*\) (which we assume to be positive), to be the value such that

\[P ( - z^* < z \leq z^*)=C.\] We may talk of either \(z^*\) or the pair \(\pm z^*\) as being the critical \(z\)-value(s).

The below calculates the critical \(z\)-values that give the middle 95% of a distribution where there is 2.5% of values in either “wing” of the distribution.131

#Critical z-score for this confidence level

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

zStar## [1] 1.959964Note that we set C.Level to be 0.95 earlier and this output is the more precise version of “2.” We call this value \(z^*\) or zStar in R coding. The Margin Of Error, which we abbreviated \(\text{MOE}\), is the product of \(z^*\) and the standard deviation of \(\hat{p}\), or

\[\text{MOE} = z^* \cdot \sigma_{\hat{p}}.\]

However, as we are unable to know \(\sigma_{\hat{p}}\) without knowing \(p\), we are forced to use our approximation of \(\sigma_{\hat{p}}\), the Standard Error. That is, we have the following.

Definition 9.8 We define the Margin of Error for a Confidence Interval for a Population Proportion to be given by \[\text{MOE} = z^* \cdot \sigma_{\hat{p}},\] but in practice we are forced to accept the approximation of

\[\text{MOE} \approx z^* \cdot \text{SE}.\]

We can now write the confidence interval as

\[\left( \hat{p}_0 \pm \text{MOE}\right) \approx \left( \hat{p}_0 \pm z^* \cdot \text{SE} \right) = \left(\hat{p}_0 \pm z^* \cdot \sqrt{\frac{\hat{p}_0\cdot( 1-\hat{p}_0)}{n}}\right).\]

This says that we expect that 95% of all samples pulled from a population with a proportion of p would create a confidence interval, using the above formula, that would contain p.

Using the calculations above, we can find these bounds with the following code.

CIL <- pHat0 - zStar*SE #Confidence Interval Lower Bound

CIU <- pHat0 + zStar*SE #Confidence Interval Upper Bound

c( CIL, CIU ) #Confidence Interval## [1] 0.4423159 0.6376841This gives a 95% confidence interval of

\[ \left(\hat{p}_0 \pm z^*\cdot SE\right) = \left( 0.54 \pm 1.959964 \cdot 0.04983974 \right) = \left( 0.44232, 0.63768\right).\]

Thus, our sample did give a confidence interval that contains \(p\) =p0 = 0.6. To be precise about what we mean about our confidence, 95% of all random samples will give a confidence interval that captures \(p\). So, if we view our sample as one random sample pulled from all possible samples, then we have a 95% chance that our sample does give a confidence interval that contains \(p\) and we indeed get that \(0.4423481 <p < 0.8376519\).

Theorem 9.4 Here we summarize our results and calculations for the confidence interval for a population proportion. If \(n \cdot \hat{p} \geq 10\), \(n \cdot ( 1 - \hat{p}) \geq 10\), and \(n << N\), then we can find a confidence interval as shown below.

\[\begin{align*} \text{Conf. Int.} &= \left(\text{Pt. Est.} \pm MOE\right)\\ &= \left( \hat{p}_0 \pm z^* \cdot SE \right)\\ & = \left(\hat{p}_0 \pm z^* \cdot \sqrt{\frac{\hat{p}_0(1-\hat{p}_0)}{n}}\right) \end{align*}\]

where

\[\begin{align*} \text{Pt. Est.} & = \text{A point estimate of the parameter.}\\ \hat{p} &= \text{Sample Proportion of Success}\\ \hat{p}_0 &= \text{Observed value of }\hat{p} = \frac{x}{n}\\ x &= \text{Number of Successes}\\ n &= \text{Sample Size}\\ N &= \text{Population Size}\\ MOE &= \text{Margin Of Error} = z^* \cdot SE\\ \text{C.Level} &= \text{Desired Confidence Level}\\ z^* & = \text{zStar} = \text{qnorm( 1 - (1 - C.Level) / 2 )}\\ SE &= \text{Standard Error} = \sqrt{\frac{\hat{p}_0 \cdot (1-\hat{p}_0)}{n}}. \end{align*}\]

The code to make the calculations in Theorem 9.4 is below.

#Number of "Good" cases: User must supply this value.

x =

#Sample Size: User must supply this value.

n =

#Confidence Level: User must supply this value.

C.Level =

#Do not change anything below this line

pHat0 <- x / n #Sample proportion

SE <- sqrt( pHat0 * (1 - pHat0) / n )

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

MOE <- zStar * SE #Margin Of Error

CIL <- pHat0 - MOE #Confidence Interval Lower Bound

CIU <- pHat0 + MOE #Confidence Interval Upper Bound

c( CIL, CIU ) #Confidence IntervalIt is important to note that Example 9.7 technically does not meet the criteria of Theorem 9.4 as we only have 7 successes. However, we maintain this example as it attaches to the M&M Candies exploration mentioned in Section 9.1.3. It is recommended that the techniques of Section 9.5 are used when the sample size is low enough that we do not expect at least 10 successes and 10 failures.

Example 9.7 We can return to the setup in Example 9.1 and find a confidence interval for the population proportion of primary colored Milk Chocolate M&M Candies, \(p\). We had 7 primary colored M&M candies out of 16 in our Fun Size bag which served as our sample. We can input these numbers into our above code and construct a 95% confidence interval for \(p\).

#Number of "Good" cases

x = 7

#Sample Size

n = 16

#Confidence Level

C.Level = 0.95

#Only change values above this line

pHat0 <- x / n #Sample proportion

SE <- sqrt( pHat0 * (1 - pHat0) / n )

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

MOE <- zStar * SE #Margin Of Error

CIL <- pHat0 - MOE #Confidence Interval Lower Bound

CIU <- pHat0 + MOE #Confidence Interval Upper Bound

c( CIL, CIU ) #Confidence Interval## [1] 0.1944261 0.6805739This means that we are 95% confident that between 19.4% and 68.1% of Milk Chocolate M&M candies are primary colored. This is a very wide interval (high margin of error or small level of precision), but that is easily explained by our very small sample size of \(n=16\).

9.2.3 Sample Size for Desired Confidence

We can also consider how sample size affects confidence intervals. Returning to our carnival game analogy at the beginning of Section 9.2, it seems clear that your confidence would be lower if you were given only a single dart versus the ability to throw multiple darts. Moreover, the more darts that you are allowed to throw, your chance of winning definitely increases if you only need to have one dart hit the given region. Sample size plays the same role as the number of darts we are allowed to throw. This means we have the following relationships where we imagine the Margin of Error (and thus the precision) as being fixed at one particular level, i.e. either the bullseye or the entire scoring region.

We can fix the Margin of Error (and thus the Precision) and get that the sample size moves in the same direction as the confidence. That is, we get that

\[(\text{Sample Size} \uparrow) \Leftrightarrow (\text{Confidence} \uparrow)\] and

\[(\text{Sample Size} \downarrow) \Leftrightarrow (\text{Confidence} \downarrow).\]

The above Big Idea means that we can adjust our sample size to get a desired confidence level for a desired margin of error. That is to say, if we want to know a population proportion to within five percentage points, for example, then we can do that for any selected confidence level as long as we are able to get a sample size which is sufficiently large.

To see if our intuition about the relationship between sample size and confidence is correct, we will find the sample size needed for a certain confidence level and margin of error. To do this we begin with the equation for the margin of error and solve for the value of \(n\).

\[\begin{align*} \text{MOE} &= z^* \sqrt{ \frac{ \hat{p}_0 \cdot (1- \hat{p}_0)}{n}}\\ \text{MOE}^2 &= \left( z^* \right)^2 \cdot \frac{ \hat{p}_0 \cdot (1- \hat{p}_0)}{n}\\ n &= \left( z^* \right)^2 \frac{ \hat{p}_0 \cdot (1- \hat{p}_0)}{\text{MOE}^2} \end{align*}\]

If you have a prior estimate \(\hat{p}_0\) of \(p\), you can try the formula above to find the minimum sample size, \(n\). However, it should be made clear that any inaccuracy in \(\hat{p}_0\) could cause \(n\) to be too low for the desired margin of error. We need to dive a bit deeper to ensure that \(n\) is indeed large enough regardless of prior knowledge (or lack thereof).

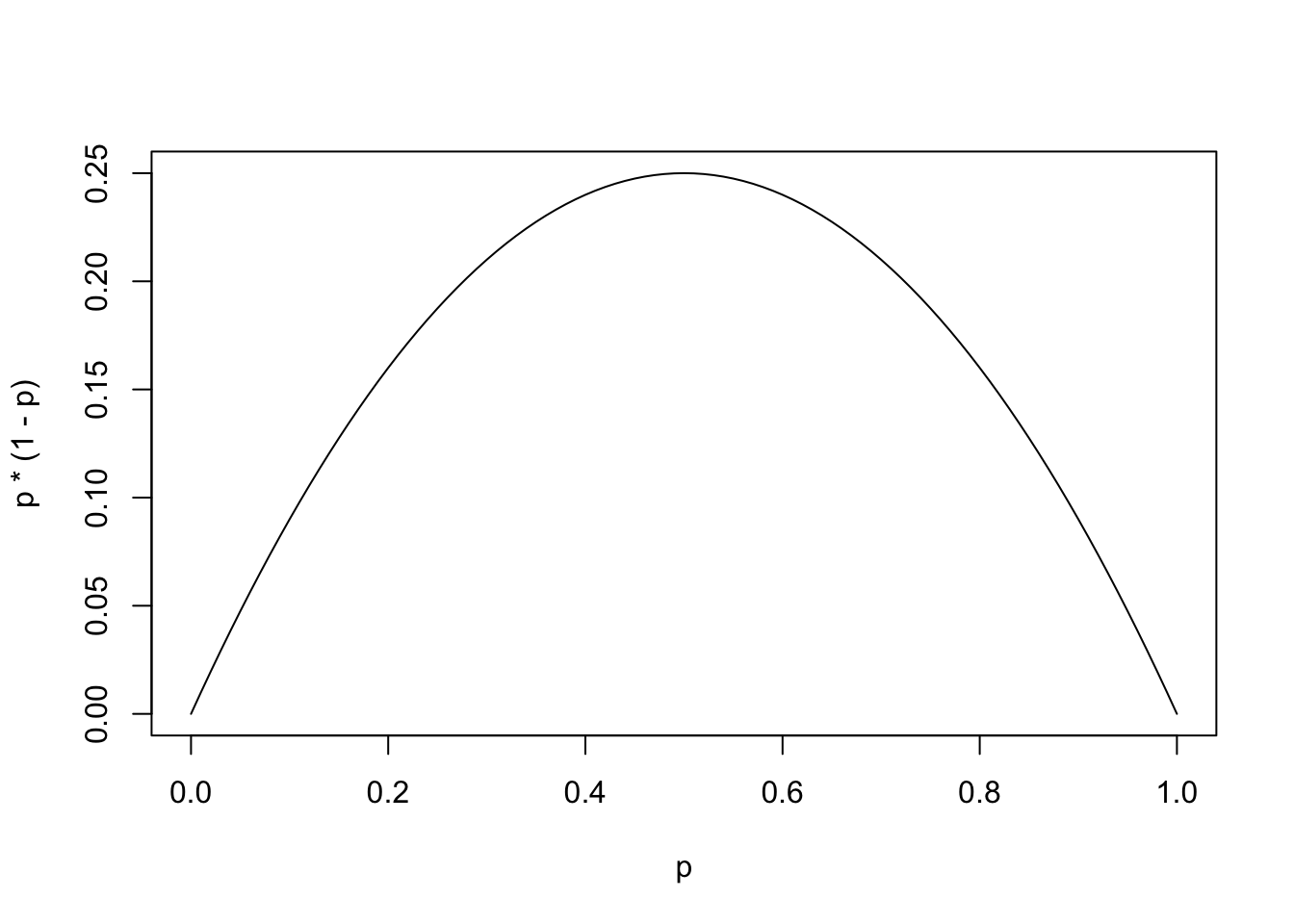

Being that we are trying to find the required sample size for a sample to calculate \(\hat{p}_0\), it is possible that we have no prior value of \(\hat{p}_0\) and thus need to understand how the term \(\hat{p}_0 (1 - \hat{p}_0)\) affects the formula we have for \(n\).

Figure 9.5: Plot of f(p) = p(1-p)

As this is the graph of \(f(p) = p (1-p) = p - p^2\) we see that it is a parabola and we know that the maximum value occurs midway between the two zeros of \(p = 0, 1\). Therefore, the function takes on a maximum value at \(p = \frac{1}{2}\) where \(f\left( \frac{1}{2} \right) = \frac{1}{4}\). This proves that for any statistic, \(\hat{p}_0\). we get that

\[\hat{p}_0 \cdot (1- \hat{p}_0) \leq \frac{1}{4}.\]

This now gives us that \[n = \left( z^* \right)^2 \frac{ \hat{p}_0 \cdot (1- \hat{p}_0)}{\text{MOE}^2} \leq \frac{ \left( z^* \right)^2}{4 \cdot \text{MOE}^2}.\]

Theorem 9.5 To obtain a confidence interval for a population proportion with a margin of error now larger than \(\text{MOE}\) at a confidence level of \(\text{C.Level}\), you should use a Minimum Sample Size of at least \[\frac{ \left( z^* \right)^2}{4 \cdot \text{MOE}^2} \geq n\] where \(n\) is the theoretical minimum sample size without our removal of the \(\hat{p}_0\) terms.

To fulfill the conditions of Theorem 8.2, we need to ensure that there are at least 10 “successes” and at least 10 “failures” in our sample. However, as this condition can not be verified until after the sample has been gathered and analyzed, it is a sticky topic to interlace with Theorem 9.5.

However, we simply reiterate, that any sample used for running inference on a population proportion should contain at least 10 individuals with the desired trait and at least 10 individuals without the desired trait.

This code chunk gives a way to use R to calculate the minimum sample size, here denoted minSampleSize, using the formula in Theorem 9.5. We have also invoked the R function ceiling() which will round up the value of minSampleSize to give an integer value.

#Desired Margin of Error

MOE =

#Confidence Level

C.Level =

#Do not change anything below this line

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

minSampleSize <- ceiling( zStar^2 / ( 4 * MOE^2 ) )

minSampleSize #Outputs the minimum sample sizeExample 9.8 We found a confidence interval for the population proportion of primary colored M&M candies in Example 9.7. In that example we had a sample size which was actually below the threshold of Theorem 9.4. Regardless, we found a margin of error of over 24.3% due to the exceptionally small sample size. If we wanted a confidence interval with a smaller margin of error, we would need to increase the sample size based on Theorem 9.5.

#Desired Margin of Error

MOE = 0.1

#Confidence Level

C.Level = 0.95

#Only change values above this line

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

minSampleSize <- ceiling( zStar^2 / ( 4 * MOE^2 ) )

minSampleSize## [1] 97This says that the minimum sample size needed to achieve a margin of error of \(0.1\) at the 95% confidence level is 97.

If we choose a confidence level near \(95\%\), we get that \(z^* \approx 2\) so that \(\frac{\left(z^*\right)^2}{4} \approx 1\). More accurately, the confidence level given by pnorm( 2 ) - pnorm( -2 ) would give that \(z^* = 2\) and simplify the above formula to

\[\frac{1}{\text{MOE}^2} \geq n.\] The exact confidence level for this simplification is below.

pnorm( 2 ) - pnorm( -2 )## [1] 0.9544997Theorem 9.6 If we want to consider confidence levels of approximately \(95\%\),132 then we can use the formula

\[\frac{1}{\text{MOE}^2}\]

for our minimum sample size which can be found with even a basic function calculator. We will call this the Minimum Sample Size Rule of Thumb.

We summarize the Minimum Sample Size Rule of Thumb with a couple of explicit examples.

If you want to be \(95\%\) confident that a sample mean is within 0.1 of the population mean, then you should have at least 100 people in the sample.

If you want to be \(95\%\) confident that a sample mean is within 0.01 of the population mean, then you should have at least 10000 people in the sample.

If you want to be \(95\%\) confident that a sample mean is within 0.001 of the population mean, then you should have at least 1000000 people in the sample.

Example 9.9 For example, if we revisit Example 9.8 above, we can get \(n\) by simply calculating \(\frac{1}{0.1^2}\) which is \(\frac{1}{0.1^2} = \frac{1}{0.01} = 100\) which is very close to the value we found initially. The slightly higher value of \(n\) does give us the slightly higher confidence level of \(95.45\%\) compared to the \(95\%\) we used in Example 9.8.

Example 9.10 To see how the margin of error affects the minimum sample size needed, we can redo Example 9.8 but change the desired margin of error to be \(0.01\).

#Desired Margin of Error

MOE = 0.01

#Confidence Level

C.Level = 0.95

#Only change values above this line

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

minSampleSize <- ceiling( zStar^2 / ( 4 * MOE^2 ) )

minSampleSize## [1] 9604This says that the minimum sample size needed to achieve a margin of error of \(0.01\) at the 95% confidence level is 9604.

We can also compare this to the Minimum Sample Size Rule of Thumb.

1/MOE^2## [1] 10000This shows that the rule of thumb gives a value that is very consistent with the results of Theorem 9.5.

Comparing this example to Example 9.8, we see that each sample size found here is one hundred times the values found before for MOE = 0.1.

If we have a sample with a certain margin of error at a specific confidence level, to reduce the margin of error by a factor of \(F\), we need a sample size that is \(F^2\) times larger. For example, if we want to cut the margin of error in half, we need a sample that is four times larger.

If we wanted to gain another decimal of accuracy, we would need to cut the margin of error by a factor of ten which means we would need a sample that is one hundred times larger than the original sample.

9.2.4 Confidence Interval Applications

Example 9.11 (College Population) Early in Part 1 of this chapter, we investigated a university population that was 60% female. However, we never stopped to ask how we obtained the exact value of the parameter. Suppose we had a SRS of 50 students from a university and 28 identified as female. From this sample, what can we confidently say about \(p\)?

If we use the most common confidence level of 95%, we can find this confidence interval with the following code.

#Number of "Good" cases

x = 28

#Sample Size

n = 50

#Confidence Level

C.Level = 0.95

#Only change values above this line

pHat0 <- x / n #Sample proportion

SE <- sqrt( pHat0 * (1 - pHat0) / n )

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

MOE <- zStar * SE #Margin Of Error

CIL <- pHat0 - MOE #Confidence Interval Lower Bound

CIU <- pHat0 + MOE #Confidence Interval Upper Bound

c( CIL, CIU ) #Confidence Interval## [1] 0.4224111 0.6975889The above code shows the all the calculations being made individually. It is possible to make the calculations for CIL and CIU in fewer lines of code, but they would be necessarily more complicated.

Here at Statypus, we try to write code that can be understood as easily as possible and which saves parts of the calculations to your environment if they want to be looked at.

To summarize, this means from our small sample that our single best guess, or point estimate is 0.64, but we have very little confidence (likely 0%), that \(p\) is exactly 0.64. However, we have 95% confidence that CIL<\(p\)<CIU or \(0.4224111 <p < 0.6975889\) or that \(p\) is approximately between 42.2% and 69.8%.

All of the values above are stored in memory and not automatically displayed to the reader other than the final output of CIL and CIU. However, to find the value of any of other terms, you only need to type the name into RStudio and execute it. For example, to find the Margin Of Error, we can simply run the line MOE.

MOE## [1] 0.1375889This states that for our confidence interval, the margin of error is approximately 13.8%.

We have a 95% chance that our sample will yield a “good” confidence interval (i.e. one that contains \(p\)) and we indeed get that \(0.4224111 <p < 0.6975889\). If we want to add confidence, we know from before that we should expect the margin of error to also increase (unless we also altered the sample size, which we won’t do here). Since we are only considering our one sample, the values of \(n\) and \(\hat{p}_0\) will not change and therefore the value of the Standard Error,

\[SE = \sqrt{ \frac{\hat{p}_0 \cdot (1-\hat{p}_0)}{n}},\] does not change.

The value of \(z^*\), however, is calculated directly from the confidence level. If we choose to set the confidence level to 99%, we can find the new value of \(z^*\) using the code below.

C.Level = 0.99

zStar <- qnorm(1-(1-C.Level)/2) #Critical z-score for this confidence level

zStar## [1] 2.575829That is we get that if the confidence level if 0.99, or 99%, that \(z^* \approx 2.6\) versus the approximate value of 2 we had for 95% confidence. This will give a wider confidence interval than we had before. We first find the new Margin Of Error, or MOE, with the relevant line of code and display it.

MOE <- zStar * SE

MOE## [1] 0.1808225We can find the confidence interval with the last few lines of code that followed the calculation of MOE.

CIL <- pHat0 - MOE #Confidence Interval Lower Bound

CIU <- pHat0 + MOE #Confidence Interval Upper Bound

c( CIL, CIU ) #Confidence Interval## [1] 0.3791775 0.7408225That is, we are 99% confident that \(p\) is between 37.9% and 74.1% which has a margin of error of 0.1808225. This was done with a sample of size only \(n=50\). We could increase our precision if we had a larger sample.

As we discussed earlier, we can increase confidence with a given sample or a sample of the same size, but the penalty is a less precise confidence interval.

Using Example 9.7 as your guide, find a 95% confidence interval for the population proportion of primary colored M&M candies if you use a sample from a standard size bag and observe 246 primary colored M&M candies out of 506 total M&M candies. How does the larger sample impact the confidence interval? What if you increase the confidence level to 98%?

Example 9.12 (What proportion of babies born in the US are girls?) According to the 2000 National Vital Statistics Report, the number of boys born in the US in 2000 was 2,076,969 and the number of girls born was 1,981,845133. This sample shows that \(\hat{p}_0 = \frac{1981845}{1981845 + 2076969} \approx 0.4883\) which is close to the 50% that a lot of people would guess. Is it possible that the actual population proportion, \(p\), is actually 0.5? Our first method for attacking this question is to find a confidence interval for \(p\) given our sample.

b = 2076969

g = 1981845

x = g

n = b+g

pHat0 <- x / n #Sample proportion

pHat0## [1] 0.4882818This gives us our setup and shows the approximation of \(\hat{p}_0\), 0.4882818. We can now find confidence intervals with confidence levels of 0.9, 0.95, and 0.99 by using our code above.

C.Level = 0.9

SE <- sqrt( pHat0 * (1 - pHat0) / n )

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

MOE <- zStar * SE #Margin Of Error

CIL <- pHat0 - MOE #Confidence Interval Lower Bound

CIU <- pHat0 + MOE #Confidence Interval Upper Bound

c( CIL, CIU ) #Confidence Interval## [1] 0.4878737 0.4886899C.Level = 0.95

SE <- sqrt( pHat0 * (1 - pHat0) / n )

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

MOE <- zStar * SE #Margin Of Error

CIL <- pHat0 - MOE #Confidence Interval Lower Bound

CIU <- pHat0 + MOE #Confidence Interval Upper Bound

c( CIL, CIU ) #Confidence Interval## [1] 0.4877955 0.4887681C.Level = 0.99

SE <- sqrt( pHat0 * (1 - pHat0) / n )

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

MOE <- zStar * SE #Margin Of Error

CIL <- pHat0 - MOE #Confidence Interval Lower Bound

CIU <- pHat0 + MOE #Confidence Interval Upper Bound

c( CIL, CIU ) #Confidence Interval## [1] 0.4876427 0.4889209Notice how the width of the confidence “moves with” the confidence level. That is, if we increase the confidence level, we find a wider confidence interval or equivalently an increased margin of error. Equivalently, a decrease in the confidence level corresponds to a decrease in the margin of error.

Example 9.13 (Melody's Confidence) We can return to the confident and head strong little girl, Melody, and her struggles learning which shoe was which. Using the above techniques, we can find a 95% confidence for Melody’s “underlying” success rate of \(p\). That is, we are trying to estimate what the value of \(p\) would be if Melody was somehow frozen in time/age and she was allowed to put her shoes on an infinite number of times.

The actual calculation of the confidence interval is simply a matter of modifying the code chunk we have been using.

#Number of "Good" cases

x = 13

#Sample Size

n = 40

#Confidence Level

C.Level = 0.95

#Only change values above this line

pHat0 <- x / n #Sample proportion

SE <- sqrt( pHat0 * (1 - pHat0) / n )

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

MOE <- zStar * SE #Margin Of Error

CIL <- pHat0 - MOE #Confidence Interval Lower Bound

CIU <- pHat0 + MOE #Confidence Interval Upper Bound

c( CIL, CIU ) #Confidence Interval## [1] 0.1798518 0.4701482This means we are 95% confident that Melody’s success “parameter” is between 0.1798518 and 0.4701482. That is, we are 95% confident that Melody would NOT succeed as often as a blind ape in a dark room!

We can increase the confidence to see if the wider interval would contain the value of \(p= 0.5\) that we assigned to BADRs.

#Number of "Good" cases

x = 13

#Sample Size

n = 40

#Confidence Level

C.Level = 0.99

#Only change values above this line

pHat0 <- x / n #Sample proportion

SE <- sqrt( pHat0 * (1 - pHat0) / n )

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

MOE <- zStar * SE #Margin Of Error

CIL <- pHat0 - MOE #Confidence Interval Lower Bound

CIU <- pHat0 + MOE #Confidence Interval Upper Bound

c( CIL, CIU ) #Confidence Interval## [1] 0.1342429 0.5157571This shows that we cannot say we are 99% confident that \(p\) isn’t 0.5 because \(0.1342429 < 0.5< 0.5157571\)! So while we were 95% confident that Melody wasn’t as accurate as a BADR, we are not 99% confident of the same fact. To be able to understand just what level of evidence we have against Melody will require the tools of Section 9.3.

Example 9.14 (The Unfair Coin) Imagine we flipped a coin 50 times and that it produced 32 heads. Using just this, what can be confidently said about the coin’s true proportion of heads, \(p\). Running the code chunk we have been working with yields the following.

#Number of "Good" cases

x = 32

#Sample Size

n = 50

#Confidence Level

C.Level = 0.95

#Only change values above this line

pHat0 <- x / n #Sample proportion

SE <- sqrt( pHat0 * (1 - pHat0) / n )

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

MOE <- zStar * SE #Margin Of Error

CIL <- pHat0 - MOE #Confidence Interval Lower Bound

CIU <- pHat0 + MOE #Confidence Interval Upper Bound

c( CIL, CIU ) #Confidence Interval## [1] 0.5069532 0.7730468This says that we are 95% confident that \(0.5069 < p < 0.7731\) meaning that there is 95% confidence in the fact that \(p>0.5\) which would mean the coin is unfair.

However, if we switch to a 97.5% confidence interval and repeat the calculations, we get the following.

#Number of "Good" cases

x = 32

#Sample Size

n = 50

#Confidence Level

C.Level = 0.975

#Only change values above this line

pHat0 <- x / n #Sample proportion

SE <- sqrt( pHat0 * (1 - pHat0) / n )

zStar <- qnorm( 1 - (1 - C.Level) / 2 )

MOE <- zStar * SE #Margin Of Error

CIL <- pHat0 - MOE #Confidence Interval Lower Bound

CIU <- pHat0 + MOE #Confidence Interval Upper Bound

c( CIL, CIU ) #Confidence Interval## [1] 0.4878485 0.7921515This says that we are 97.5% confident that \(0.4878 < p < 0.7922\) meaning that we are NOT 97.5% confidence in the fact that \(p>0.5\), i.e. the coin is unfair. This example shows that we are somewhere in between 95% and 97.5% confident that the coin is unfair, but we do not yet have the techniques to fully understand this. The technique of a hypothesis test will allow us to determine an explicit measure of how much confidence we have in the fact that \(p>0.5\).

Review for Chapter 9 Part 2

Inference for Population Proportion(s) Quiz: Part 2

This quiz tests your understanding of Confidence Intervals for a Population Proportion. The answers can be found prior to the Exercises section.

- True or False: A confidence interval provides a range of plausible values for the sample proportion, \(\hat{p}\).

- True or False: The Margin of Error (MOE) is subtracted from and added to the point estimate, \(\hat{p}_0\), to create the confidence interval.

- True or False: When constructing a confidence interval for the population proportion, \(p\), the point estimate of the parameter is the observed sample proportion, \(\hat{p}_0\).

- True or False: For a fixed sample size, increasing the confidence level will decrease the Margin of Error (MOE), thereby increasing the precision of the interval.

- True or False: Since the population proportion \(p\) is unknown for a confidence interval, the Standard Error (SE) uses the observed sample proportion \(\hat{p}_0\) in its formula: \(\text{SE} = \sqrt{\frac{\hat{p}_0(1-\hat{p}_0)}{n}}\).

- True or False: The critical \(z\)-value, denoted \(z^*\), is determined by the desired confidence level and is used to calculate the Margin of Error for a confidence interval for a population proportion.

- True or False: The Success/Failure Condition for a 1 sample proportion confidence interval is satisfied if the expected number of successes (\(n \cdot \hat{p}\)) and the expected number of failures (\(n \cdot (1 - \hat{p})\)) are both at least 10.

- True or False: The final confidence interval is calculated by taking the point estimate and adding/subtracting the Margin of Error, where \(\text{MOE} = z^* \cdot \text{SE}\).

- True or False: The standard error (SE) is a statistic that serves as our best estimate for the true standard deviation of a sampling distribution.

- True or False: The \(5\%\) Condition is necessary to ensure independence, and this condition means the sample size (\(n\)) must be less than five percent of the population size (\(N\)).

Definitions

Section 9.2.2

Definition 9.5 \(\;\) Given a sample with a sample proportion of \(\hat{p}_0\) from a population being measured for a certain trait, we say that the Standard Error of The Distribution of Sample Proportions or simply the Standard Error attributed to the sample proportion \(\hat{p}_0\) is the value

\[\text{SE} = \sqrt{\frac{\hat{p}_0\cdot( 1-\hat{p}_0)}{n}}\] which is an approximation to the (likely) unknown value of

\[\sigma_{\hat{p}} = \sqrt{\frac{ p \cdot( 1-p)}{n}}.\] We will treat the standard error like a standard deviation because it is a point estimate of one.

Definition 9.6 \(\;\) When discussing a confidence interval, the Confidence Level is a value between 0 and 1 that states how often the procedure used will give an interval that captures the parameter. I.e. how often the parameter falls between the values which define the confidence interval.

Definition 9.7 \(\;\) Given a confidence level of \(C\), we define the Critical \(z\)-Value, which we denote \(z^*\) (which we assume to be positive), to be the value such that

\[P ( - z^* < z \leq z^*)=C.\] We may talk of either \(z^*\) or the pair \(\pm z^*\) as being the critical \(z\)-value(s).

Definition 9.8 \(\;\) We define the Margin of Error for a Confidence Interval for a Population Proportion to be given by \[\text{MOE} = z^* \cdot \sigma_{\hat{p}},\] but in practice we are forced to accept the approximation of \[\text{MOE} \approx z^* \cdot \text{SE}.\]

Results

Section 9.2.2

Theorem 9.4 \(\;\) Here we summarize our results and calculations for the confidence interval for a population proportion. If \(n \cdot \hat{p} > 5\), \(n \cdot ( 1 - \hat{p}) >5\), and \(n << N\), then we can find a confidence interval as shown below.

\[\begin{align*} \text{Conf. Int.} &= \left(\text{Pt. Est.} \pm MOE\right)\\ &= \left( \hat{p}_0 \pm z^* \cdot SE \right)\\ & = \left(\hat{p}_0 \pm z^* \cdot \sqrt{\frac{\hat{p}_0(1-\hat{p}_0)}{n}}\right) \end{align*}\]

where

\[\begin{align*} \text{Pt. Est.} & = \text{A point estimate of the parameter.}\\ \hat{p} &= \text{Sample Proportion of Success}\\ \hat{p}_0 &= \text{Observed value of }\hat{p} = \frac{x}{n}\\ x &= \text{Number of Successes}\\ n &= \text{Sample Size}\\ N &= \text{Population Size}\\ MOE &= \text{Margin Of Error} = z^* \cdot SE\\ \text{C.Level} &= \text{Desired Confidence Level}\\ z^* & = \text{zStar} = \text{qnorm( 1 - (1 - C.Level) / 2 )}\\ SE &= \text{Standard Error} = \sqrt{\frac{\hat{p}_0 \cdot (1-\hat{p}_0)}{n}}. \end{align*}\]

The code to make all of these calculations is contained in the Code Template below.

Section 9.2.3

Theorem 9.5 \(\;\) To obtain a confidence interval for a population proportion with a margin of error now larger than \(\text{MOE}\) at a confidence level of \(\text{C.Level}\), you should use a Minimum Sample Size of at least \[\frac{ \left( z^* \right)^2}{4 \cdot \text{MOE}^2} \geq n\] where \(n\) is the theoretical minimum sample size without our removal of the \(\hat{p}_0\) terms.

Big Ideas

We want to make as confident of a prediction as we can about \(p\) based on a particular value of \(\hat{p}\).

Section 9.2.1

To illuminate the idea between precision and confidence, let’s consider a simple carnival game of trying to hit a bullseye with a dart from a certain distance. There seems to be a give and take between the precision of the shot needed and the confidence of the thrower. If we think of the radius of the bullseye as the margin or error granted the thrower, then it is clear that as we increase the margin of error, i.e. a larger target, that the confidence of the thrower should increase.

To make this more explicit, imagine you are standing at a standard dart board from any length. The inner or double bullseye has a radius, or margin of error, of only 0.25 inches. Try to mentally guess your probability of hitting this small target if you were given three attempts. Would you be willing to wager $1 that you could do it?

Now imagine that the game is simply whether you can hit the scoring region of the dartboard as a whole from the same distance as with the double bullseye. This has a radius, or margin of error, of just under 9 inches. Would you be willing to make the $1 wager now?

Regardless of your confidence at hitting the double bullseye, it should be true that you had more confidence at hitting the scoring region of the board. You still may not want to take the bet, but you should have had more confidence in hitting the larger target.

For any given sample size, we get that \[(\text{Confidence} \uparrow) \Leftrightarrow (\text{Margin of Error} \uparrow) \Leftrightarrow (\text{Precision} \downarrow)\]

or logically equivalently that

\[(\text{Confidence} \downarrow) \Leftrightarrow (\text{Margin of Error} \downarrow) \Leftrightarrow (\text{Precision} \uparrow).\]

Section 9.2.2